AdaBoost Algorithm in Machine Learning

What is AdaBoost?

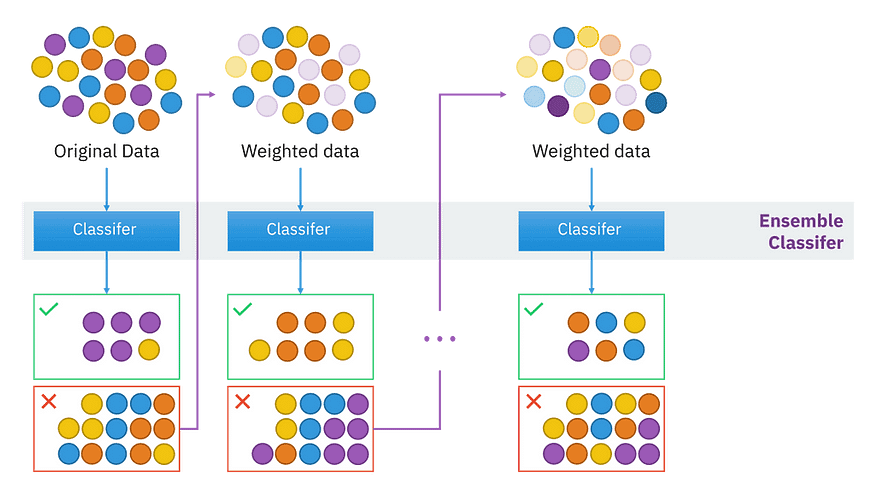

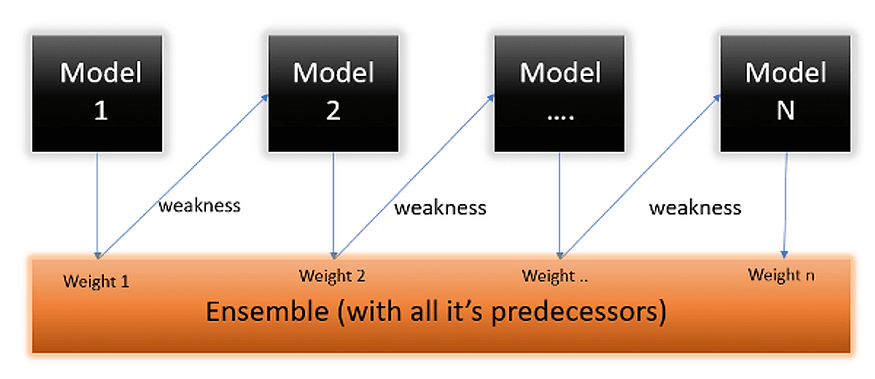

AdaBoost, short for Adaptive Boosting, is an ensemble machine learning algorithm that can be used in a wide variety of classification and regression tasks. It is a supervised learning algorithm that is used to classify data by combining multiple weak or base learners (e.g., decision trees) into a strong learner. AdaBoost works by weighting the instances in the training dataset based on the accuracy of previous classifications.

Freund and Schapire introduced the AdaBoost algorithm in 1997. Boosting has since become a popular approach to binary classification problems. Boosting algorithms increase predictive power by combining many weak learners into a single strong learner.

Boosting works by first building a model on the training dataset and then building a second model that corrects the errors of the first. This process is repeated until the errors are small enough or a preset number of models has been built. The final prediction combines all of these models (weak learners) into one strong learner.

Three widely used boosting algorithms are:

- AdaBoost (Adaptive Boosting)

- Gradient Boosting

- XGBoost (Extreme Gradient Boosting)

Let’s understand “What is the AdaBoost algorithm?”

What is the AdaBoost Algorithm in Machine Learning?

There are many machine learning algorithms to choose from for a given problem statement, and AdaBoost is one of these predictive modeling techniques. AdaBoost, short for Adaptive Boosting, is an ensemble method: it combines many simple models into a single, stronger one.

The most commonly used base estimator in AdaBoost is a decision tree with one level, that is, a decision tree with just one split. Such trees are called decision stumps.
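To make the idea concrete, here is a minimal plain-Python sketch of a decision stump. The class and field names are hypothetical (not from any library), and the rows are made-up values for the Gender, Age, and Income features used later in this article. For reference, scikit-learn's `AdaBoostClassifier` uses a one-level `DecisionTreeClassifier` as its default base estimator.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DecisionStump:
    """A one-level decision tree: one threshold test on one feature."""
    feature: int       # index of the feature to split on
    threshold: float   # split point for that feature
    left_label: int    # class predicted when x[feature] <= threshold
    right_label: int   # class predicted when x[feature] > threshold

    def predict(self, X: List[List[float]]) -> List[int]:
        return [self.left_label if x[self.feature] <= self.threshold else self.right_label
                for x in X]


# Hypothetical rows: [gender (0 = female, 1 = male), age, income]
X = [[0, 25, 40], [0, 40, 20], [1, 30, 60], [1, 50, 80], [1, 35, 30]]
gender_stump = DecisionStump(feature=0, threshold=0.5, left_label=1, right_label=-1)
print(gender_stump.predict(X))  # [1, 1, -1, -1, -1]
```

A single split is all a stump can express, which is exactly why it is a "weak" learner and why AdaBoost needs many of them.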

The algorithm first assigns equal weights to all data points and builds a model. It then assigns larger weights to the points that were misclassified, so that the next model pays more attention to them. It keeps training models in this way until the error is small enough or a preset number of models has been built.

To illustrate, imagine you built a decision tree model on the Titanic dataset and obtained an accuracy of 80%. You then try other methods and measure their accuracy: 75% for KNN and 70% for logistic regression.

Each model trained on the same dataset gives a different accuracy. What if we combine all of these models to create the final prediction? Combining the outputs of several models, for example by averaging or voting, often yields more accurate results than any single model. In this way, we can improve predictive power.
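The idea of combining models can be sketched as a simple majority vote. This is a plain-Python illustration with made-up predictions and a hypothetical helper name, not part of AdaBoost itself: averaging 0/1 predictions and thresholding at 0.5 picks the majority class. AdaBoost refines this idea by giving each model's vote its own weight (its "amount of say").

```python
from typing import List


def majority_vote(predictions: List[List[int]]) -> List[int]:
    """Combine 0/1 predictions from several models by simple majority.

    predictions[m][i] is model m's predicted class for sample i.
    """
    n_models = len(predictions)
    combined = []
    for votes in zip(*predictions):          # all models' votes for one sample
        mean_vote = sum(votes) / n_models    # average of the 0/1 outcomes
        combined.append(1 if mean_vote > 0.5 else 0)
    return combined


# Hypothetical survival predictions (1 = survived) from three models on five passengers
tree_preds = [1, 0, 1, 1, 0]
knn_preds = [1, 1, 0, 1, 0]
lr_preds = [0, 0, 1, 1, 1]
print(majority_vote([tree_preds, knn_preds, lr_preds]))  # [1, 0, 1, 1, 0]
```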

Understanding the Working of the AdaBoost Classifier Algorithm

Step 1:

The image below shows the dataset for this AdaBoost example, with the features Gender, Age, and Income. This is a classification problem because the target column is binary. First, every data point is assigned a weight, and initially all of the weights are equal.

The sample weights are calculated using the following formula:

$$w_i = \frac{1}{N}$$

where N denotes the total number of data points.

Because we have 5 data points, the sample weights will be 1/5.

Step 2:

We will examine how well “Gender” classifies the samples, and then how well the other features (Age and Income) classify them. We’ll build a decision stump for each feature and compute each stump’s Gini index. Our first stump will be the one with the lowest Gini index.

Let’s suppose Gender has the lowest Gini index in our dataset, so it will be our first stump.
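A minimal plain-Python sketch of this step, assuming a hypothetical five-row dataset with labels +1/-1, Gender encoded as 0/1, and one hand-picked threshold per feature (a full implementation would try every candidate threshold). The function names are illustrative. Note that many AdaBoost implementations pick the stump with the lowest weighted error instead; the Gini index is the choice made in this walkthrough.

```python
from typing import Dict, List, Tuple


def gini(labels: List[int], weights: List[float]) -> float:
    """Weighted Gini impurity of one node: 1 - sum over classes of p_k^2."""
    total = sum(weights)
    if total == 0:
        return 0.0
    mass: Dict[int, float] = {}
    for y, w in zip(labels, weights):
        mass[y] = mass.get(y, 0.0) + w
    return 1.0 - sum((m / total) ** 2 for m in mass.values())


def stump_gini(values: List[float], labels: List[int], weights: List[float], threshold: float) -> float:
    """Gini index of a stump splitting on value <= threshold, weighted by each side's share."""
    total = sum(weights)
    score = 0.0
    for goes_left in (True, False):
        idx = [i for i, v in enumerate(values) if (v <= threshold) == goes_left]
        side_w = [weights[i] for i in idx]
        score += (sum(side_w) / total) * gini([labels[i] for i in idx], side_w)
    return score


# Hypothetical dataset: 5 rows, target +1 / -1, Gender encoded as 0 (female) / 1 (male)
labels = [1, 1, -1, 1, -1]
weights = [1 / 5] * 5
candidates: Dict[str, Tuple[List[float], float]] = {
    "Gender": ([0, 0, 1, 1, 1], 0.5),
    "Age": ([25, 40, 30, 50, 35], 32),
    "Income": ([40, 20, 60, 80, 30], 50),
}
scores = {name: stump_gini(vals, labels, weights, t) for name, (vals, t) in candidates.items()}
best = min(scores, key=scores.get)
print({k: round(v, 3) for k, v in scores.items()}, best)
# {'Gender': 0.267, 'Age': 0.467, 'Income': 0.467} Gender
```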

Step 3:

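Using the following formula, we will now determine the “Amount of Say” (also called “Importance” or “Influence”) of this classifier in classifying the data points:

$$\text{Amount of Say } (\alpha) = \frac{1}{2}\ln\left(\frac{1 - \text{Total Error}}{\text{Total Error}}\right)$$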

The total error is just the sum of all misclassified data points’ sample weights.

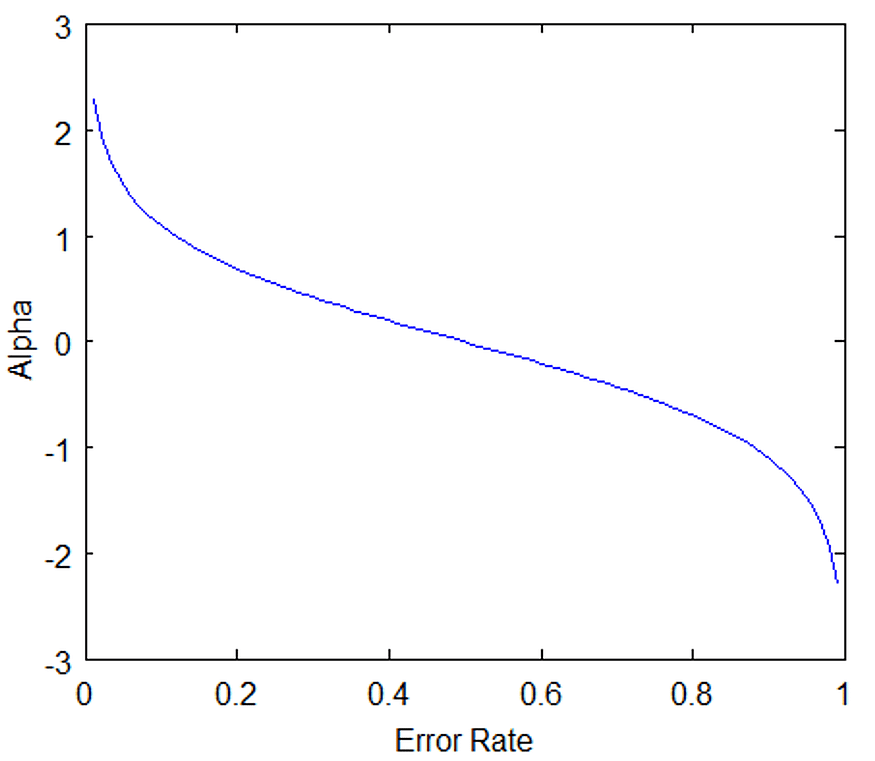

Suppose the Gender stump misclassifies one data point. Our total error is then 1/5, and the alpha (performance of the stump) is:

$$\alpha = \frac{1}{2}\ln\left(\frac{1 - 1/5}{1/5}\right) = \frac{1}{2}\ln 4 \approx 0.69$$

The total error always lies between 0 and 1: 0 represents a flawless stump, while 1 represents a stump that misclassifies every sample.

- According to the graph above, when there is no misclassification, there is no error (Total Error = 0), hence the “amount of say (alpha)” will be a huge value.

- When the classifier predicts half correctly and half incorrectly, the Total Error equals 0.5, and the classifier’s significance (amount of say) equals 0.

- If all of the samples were misclassified, the total error would be close to 1, and alpha would be a large negative number.
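Step 3 can be sketched in a few lines of plain Python. The helper names are hypothetical, and clamping the total error away from exactly 0 and 1 with a small `eps` is an assumption added so the logarithm stays finite (the formula itself is undefined at those extremes). The misclassified point is assumed to be the fourth row.

```python
import math
from typing import List


def total_error(weights: List[float], misclassified: List[bool]) -> float:
    """Sum of the sample weights of the misclassified points."""
    return sum(w for w, wrong in zip(weights, misclassified) if wrong)


def amount_of_say(error: float, eps: float = 1e-10) -> float:
    """alpha = 1/2 * ln((1 - TE) / TE); eps keeps TE away from 0 and 1."""
    error = min(max(error, eps), 1 - eps)
    return 0.5 * math.log((1 - error) / error)


weights = [1 / 5] * 5
misclassified = [False, False, False, True, False]  # the Gender stump gets one point wrong
te = total_error(weights, misclassified)
print(round(te, 4), round(amount_of_say(te), 4))    # 0.2 0.6931
print(amount_of_say(0.5))                           # 0.0
print(amount_of_say(0.99) < 0)                      # True
```

The three printed cases mirror the three bullets above: a good stump gets a positive say, a coin-flip stump gets zero, and a stump that is mostly wrong gets a negative say.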

Step 4:

You may be wondering why we need to compute a stump’s total error (TE) and performance. The answer is simple: we need to update the weights, because if the same weights were used for the next model, it would produce the same result as the previous one.

The weights of the misclassified samples will be increased, while the weights of the correctly classified samples will be decreased. As a result, the next model, built with the updated weights, will focus more on the points with higher weights.

After determining the classifier’s significance and total error, we update each sample weight using the following formula:

$$\text{New Sample Weight} = \text{Sample Weight} \times e^{\pm\alpha}$$

- When the sample is correctly classified, the exponent is negative ($e^{-\alpha}$), so its weight decreases.

- When the sample is misclassified, the exponent is positive ($e^{+\alpha}$), so its weight increases.

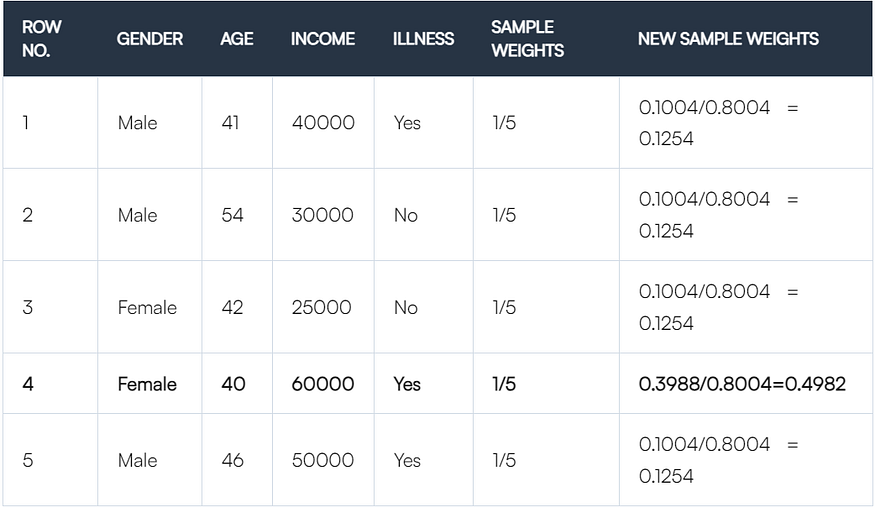

- There are four correctly classified samples and one misclassified sample. Each data point currently has a sample weight of 1/5, and the amount of say (performance) of the Gender stump is 0.69.

The new weights for the correctly classified samples are:

$$\text{New Sample Weight} = \frac{1}{5} \times e^{-0.69} \approx 0.1003$$

The new weight for the misclassified sample is:

$$\text{New Sample Weight} = \frac{1}{5} \times e^{0.69} \approx 0.3987$$

We know that the sample weights must sum to 1, but if we add all of the new sample weights together (four weights of about 0.1003 and one of about 0.3987), we get approximately 0.80. To make the total equal 1, we normalize the weights by dividing each one by this sum. After normalizing, each correctly classified sample has a weight of about 0.1254, the misclassified sample has a weight of about 0.4984, and the weights now sum to 1.
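A minimal plain-Python sketch of Step 4 (hypothetical helper name), applying the e^(±α) update and then normalizing. It uses the Gender stump's numbers from above and assumes the misclassified sample is the fourth row.

```python
import math
from typing import List


def update_weights(weights: List[float], misclassified: List[bool], alpha: float) -> List[float]:
    """Multiply by e^(+alpha) if misclassified or e^(-alpha) if correct, then normalize."""
    new = [w * math.exp(alpha if wrong else -alpha) for w, wrong in zip(weights, misclassified)]
    total = sum(new)                      # about 0.8 in the worked example
    return [w / total for w in new]       # normalized weights sum to 1


weights = [1 / 5] * 5
misclassified = [False, False, False, True, False]  # assumed: the fourth row is the wrong one
alpha = 0.69                                        # amount of say of the Gender stump
result = update_weights(weights, misclassified, alpha)
print([round(w, 4) for w in result])  # [0.1254, 0.1254, 0.1254, 0.4984, 0.1254]
```

With the unrounded alpha of ln 2 ≈ 0.6931, the normalized weights come out to exactly 0.125 and 0.5.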

Step 5:

We must now create a new dataset to see whether the errors have decreased. To do this, we drop the “sample weights” and “new sample weights” columns, keep the normalized weights, and split our data points into buckets based on them: each data point gets a sub-interval of [0, 1] whose width equals its normalized weight.

Step 6:

We’re nearly there. The method now chooses random values ranging from 0 to 1. Because improperly categorized records have greater sample weights, the likelihood of picking them is relatively high.

Assume the five random numbers chosen by our algorithm are 0.38, 0.26, 0.98, 0.40, and 0.55.

Now we’ll examine where these random numbers go in the bucket and create our new dataset, which is displayed below.

This is our new dataset, and we can see that the data point that was incorrectly categorized has been picked three times since it has a greater weight.
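The bucket-and-random-number procedure of Steps 5 and 6 is a form of weighted sampling with replacement. Here is a plain-Python sketch using `itertools.accumulate` for the bucket edges and `bisect` to find which bucket each number falls into. The weights are the normalized weights from Step 4, the misclassified sample is assumed to be the fourth row (index 3), and the helper name is hypothetical.

```python
import bisect
import itertools
import random
from typing import List, Optional, Sequence


def resample_indices(weights: Sequence[float], draws: Optional[List[float]] = None) -> List[int]:
    """Map numbers in [0, 1) onto cumulative-weight buckets and return the chosen row indices."""
    upper_edges = list(itertools.accumulate(weights))  # right edge of each row's bucket
    if draws is None:
        draws = [random.random() for _ in weights]       # one draw per row, as in Step 6
    picks = []
    for r in draws:
        idx = bisect.bisect_left(upper_edges, r)         # first bucket whose right edge >= r
        picks.append(min(idx, len(weights) - 1))         # guard against round-off at the top edge
    return picks


# Normalized weights from Step 4; the misclassified sample is assumed to be row index 3
weights = [0.1254, 0.1254, 0.1254, 0.4984, 0.1254]
print(resample_indices(weights, draws=[0.38, 0.26, 0.98, 0.40, 0.55]))  # [3, 2, 4, 3, 3]
```

Row 3 is picked three times, matching the walkthrough. In practice, the standard library's `random.choices(range(n), weights=w, k=n)` does the same job in one call.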

Step 7:

This now serves as our new dataset, and we repeat all of the preceding steps: give each data point an equal weight, then determine the stump that best classifies the new group of samples by calculating each candidate stump’s Gini index and picking the one with the lowest value.

Next, compute the “Total Error” and “Amount of Say” of the new stump, use them to update the sample weights, and normalize the newly calculated weights. Repeat these steps until the training error is low enough or a preset number of stumps has been built.
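Putting the steps together, here is a minimal from-scratch sketch of AdaBoost training in plain Python. Two simplifications are assumptions of this sketch, not the article's exact procedure: instead of resampling a new dataset (Steps 5 and 6), the weights are passed directly to the stump search (the "reweighting" variant of AdaBoost), and each stump is chosen by lowest weighted error rather than by Gini index. All function names are hypothetical; for real projects, scikit-learn's `AdaBoostClassifier` provides a tested implementation.

```python
import math
from typing import List, Tuple

Stump = Tuple[int, float, int]  # (feature index, threshold, polarity)


def stump_predict(stump: Stump, x: List[float]) -> int:
    """Predict +polarity when x[feature] <= threshold, otherwise -polarity."""
    feature, threshold, polarity = stump
    return polarity if x[feature] <= threshold else -polarity


def fit_stump(X: List[List[float]], y: List[int], w: List[float]) -> Tuple[Stump, float]:
    """Try every feature, threshold and polarity; keep the lowest weighted error."""
    best: Stump = (0, X[0][0], 1)
    best_err = float("inf")
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            for polarity in (1, -1):
                stump = (f, t, polarity)
                err = sum(wi for xi, yi, wi in zip(X, y, w) if stump_predict(stump, xi) != yi)
                if err < best_err:
                    best, best_err = stump, err
    return best, best_err


def adaboost_fit(X: List[List[float]], y: List[int], n_rounds: int = 10) -> List[Tuple[float, Stump]]:
    n = len(X)
    w = [1.0 / n] * n                               # Step 1: equal sample weights
    model: List[Tuple[float, Stump]] = []
    for _ in range(n_rounds):
        stump, err = fit_stump(X, y, w)             # Step 2: best stump on current weights
        err = min(max(err, 1e-10), 1 - 1e-10)       # keep the log finite
        alpha = 0.5 * math.log((1 - err) / err)     # Step 3: amount of say
        model.append((alpha, stump))
        # Step 4: y * h(x) is +1 when correct (weight * e^-alpha), -1 when wrong (weight * e^+alpha)
        w = [wi * math.exp(-alpha * yi * stump_predict(stump, xi)) for xi, yi, wi in zip(X, y, w)]
        total = sum(w)
        w = [wi / total for wi in w]                # normalize so the weights sum to 1
        if err <= 1e-10:                            # perfect stump: nothing left to correct
            break
    return model


def adaboost_predict(model: List[Tuple[float, Stump]], x: List[float]) -> int:
    """Sign of the alpha-weighted sum of stump votes."""
    score = sum(alpha * stump_predict(stump, x) for alpha, stump in model)
    return 1 if score >= 0 else -1


# Toy 1-D data that no single stump can separate
X = [[1], [2], [3], [4], [5], [6]]
y = [1, 1, -1, -1, 1, 1]
model = adaboost_fit(X, y, n_rounds=3)
print([adaboost_predict(model, x) for x in X])  # [1, 1, -1, -1, 1, 1]
```

On this toy data the best single stump gets two of six points wrong, while three boosted stumps classify all six correctly.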

Assume that we have built three decision trees (DT1, DT2, and DT3) sequentially on our dataset. When we pass our test data through the model, each tree makes a prediction, and the final class is decided by a weighted majority vote in which each tree’s vote is weighted by its amount of say (alpha). We make predictions for our test dataset based on that vote.
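Because AdaBoost weights each tree's vote by its amount of say, one confident tree can outvote two weak ones. A small plain-Python sketch with made-up alpha values and hypothetical helper names shows how this differs from a simple majority vote, using +1/-1 class labels:

```python
from typing import List


def weighted_vote(alphas: List[float], votes: List[int]) -> int:
    """AdaBoost-style prediction: sign of the alpha-weighted sum of +1/-1 votes."""
    score = sum(a * v for a, v in zip(alphas, votes))
    return 1 if score >= 0 else -1


def simple_majority(votes: List[int]) -> int:
    """Unweighted vote, for comparison."""
    return 1 if sum(votes) >= 0 else -1


# Hypothetical amounts of say for DT1, DT2, DT3 and their votes on one test sample
alphas = [0.2, 0.3, 1.5]
votes = [1, 1, -1]
print(simple_majority(votes), weighted_vote(alphas, votes))  # 1 -1
```

Two weak trees vote +1, but DT3's larger amount of say flips the final prediction to -1 (0.2 + 0.3 - 1.5 = -1.0).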

Conclusion

AdaBoost is a powerful and widely used machine learning algorithm that has been successfully applied to classification and regression tasks in a wide variety of domains. It is an effective method for combining multiple weak or base learners into a single strong learner and has been shown to have good generalization performance.

Its ability to reweight instances based on previous classifications lets it concentrate on the hardest examples, and it is computationally efficient and, in practice, often resistant to overfitting. The same reweighting, however, makes it sensitive to noisy labels and outliers.

Key Takeaways

- AdaBoost is an ensemble learning technique that improves predictive accuracy by combining multiple “weak” learners into a strong one.

- AdaBoost works by weighting incorrectly classified instances more heavily so that subsequent weak learners focus more on the difficult cases.

- It is adaptive in the sense that subsequent weak learners are tweaked in favor of the instances misclassified by previous classifiers.

- AdaBoost is fast, simple to implement, and versatile.

- It is highly effective in binary classification problems and can also be extended to multi-class classification problems.

- AdaBoost is sensitive to noisy data and outliers, because misclassified points keep receiving larger weights.

Happy Learning!

ABOUT THE AUTHOR

Harshit Sanwal

Marketing Analyst, DataMantra